Faster System Copies with Indexes Built for BDLS

| SAP Basis — Jeremy Monin | Updated March 2015 |

Introduction

This article is about reducing the time it takes to do an SAP system copy,

by focusing on one particular process which often has a very long runtime.

I've used this technique to run system copies substantially faster, saving 16 hours from what was a 30-hour process.

It's simpler than other speed-up methods out there, and from the SAP system's point of view it's no different from the slow usual process.

This article is about reducing the time it takes to do an SAP system copy,

by focusing on one particular process which often has a very long runtime.

I've used this technique to run system copies substantially faster, saving 16 hours from what was a 30-hour process.

It's simpler than other speed-up methods out there, and from the SAP system's point of view it's no different from the slow usual process.

During an SAP system copy, a lot of time can be spent waiting for the logical system renames (BDLS jobs)

in the target system receiving the copy.

It's important for these to run through every table, but the indexes usually aren't optimized to search

by the logical system name field.

Typically a production system is connected to several other landscapes, so BDLS has to run several times

during the copy.

By choosing tables that BDLS spends a lot of time on, and making

temporary indexes on those tables, BDLS can run a lot quicker by scanning the index instead of reading

every row in the table. Often this field is blank in large tables in typical systems, and with

a temporary index the job can quickly move on to the next table.

By creating the indexes once, instead of reading through all the tables for each BDLS, the time

savings are multiplied.

The first time going through it, this process takes some extra work and analysis.

After that all you'll need to do for each system copy is run a SQL script to create the indexes.

Since the method shown here makes changes only at the database level, there's no need to do anything differently

than usual within the ABAP system.

There are other complicated methods out there to speed up BDLS, or break it up to run in parallel by

bypassing its built-in safety checks. That's all avoided here.

The basic steps are:

- Identify tables that BDLS spends a lot of time on

- Determine key fields to create temporary indexes

- Write a script to create the temporary indexes, and another to remove them

- If you have a sandbox and time, do timing runs before and after using the scripts to refine them

- During your next system copy, create and use the indexes, then monitor where BDLS spends its time

- Refine the script by adding indexes on more tables, if more time can be saved in future copies

I'll go into more detail on each step.

1. Identify tables that BDLS spends a lot of time on

There are a few ways to identify tables that might benefit from these indexes, with varying accuracy:

- The best way is to monitor BDLS while it runs on QA or another system copied from production.

If you don't have a system copy scheduled, you can still run BDLS with unused source and target source system names,

which will make no data changes and monitor where BDLS spends its time.

I've written a Linux/Unix script you can schedule every few minutes to take text snapshots that look like SM50, and another to run afterwards to count the number of times each table appears

and measure where BDLS spends its time. See step 4 for details.

- If you don't have a system where you can do test runs of BDLS, perhaps you have a system that was recently

copied. Check job logs in SM37 for

RBDLS* (clear the date range selection fields).

If the spool output for those jobs are still available,

they will show the number of rows counted and changed for each table.

- If you don't have those job runs and spool output available, there is one more place to check.

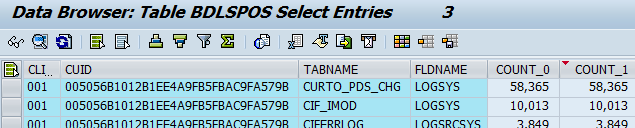

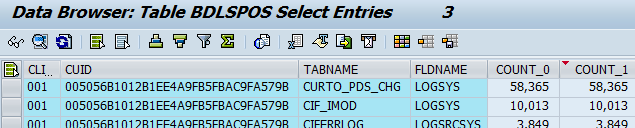

If you have an SAP system that's recently had a system copy, such as a QA or sandbox system, check the BDLSPOS table

that stores BDLS statistics and is used to generate parts of the BDLS spool output:

In SE16 selection criteria, choose COUNT_1 >= 1000, then sort descending. This shows the with the number of rows changed for each table. Note this won't show you any tables where BDLS spent a lot of time but found nothing to change.

- If none of that data is available, you probably won't be able to pick good tables for BDLS temporary indexes.

You could try to look through the list of the largest tables in your system which have logical system fields which aren't indexed.

This doesn't have the best chance of success, but might be worth considering if you want to find a few tables to try the process

during your next system copy, monitor BDLS at that time, and improve from there.

2. Determine key fields to create temporary indexes

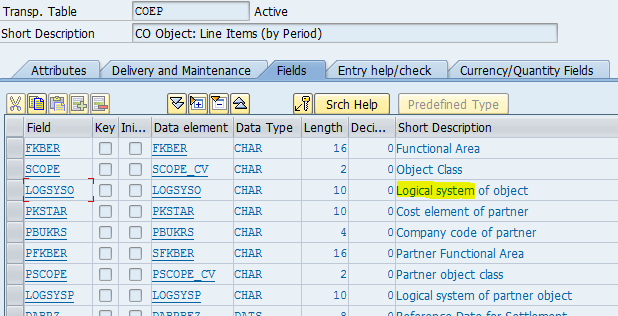

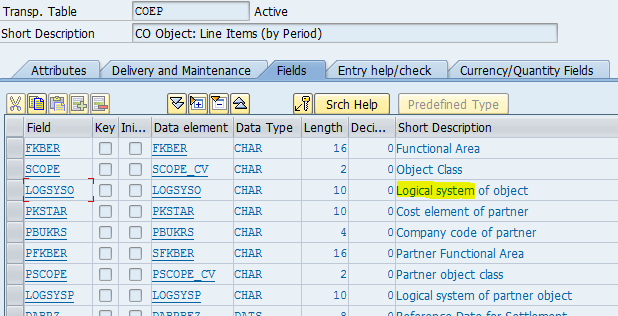

Typically you'll need the CLIENT or MANDT field and the logical system name field shown in SE11.

If you have BDLSPOS table entries from a previous system copy, that will contain each table's logical system field name.

You can also get them while BDLS is running; SM50 or DB02 can drill in and show the SQL statement.

Determine these fields for each identified table.

While in SE11 view the table's current indexes, then pick an unused name like Z1 for the temporary index.

3. Write a script to create the temporary indexes, and another to remove them

With the information from step 2, you can write scripts to create or remove the indexes.

You'll want to analyze to give your database good statistics for the new indexes.

You probably also want to include the table owner name (SQL Server) or schema and tablespace names (Oracle).

Here's a create example on Oracle for one table:

create index SAPSR3."COEP~Z1" on SAPSR3."COEP" (MANDT, LOGSYSO) nologging tablespace PSAPSR3;

analyze index SAPSR3."COEP~Z1" estimate statistics sample 2 percent;

For COEP in particular you may also want an index on LOGSYSP, its other logical system field.

4. If you have a sandbox and time, do timing runs before and after using the scripts to refine them

You'll want to quantify how much time you've saved, and tune if it's not what you expected.

A sandbox won't have production-level performance, but you can see whether you're closer to

saving 5% or 50% of the original time.

Here is a linux/unix script to take SM50-like snapshots while BDLS runs, and a second script to count the number of times each table appears.

sm50-dpmon.csh:

#!/bin/csh

# work process quick view: check SM50, save to timestamped filename

# Conditions:

# - Run as sidadm or root

# - Directory ~sidadm/sm50 must exist and writable

# - Run regularly from cron or manually

# Sample crontab entry:

# 01,04,07,10,13,16,19,22,25,28,31,34,37,40,43,46,49,52,55,58 * * * * su - sbxadm -c /home/sbxadm/sm50.csh # temp - sap sm50 work process info

# Parameters:

set SID=SBX

set usr=sbxadm

set pro=SBX_DVEBMGS00_erpsbxci01

# Be sure to also check the shell path on the first line /bin/csh

# as some unixes require /usr/bin/csh instead

# Code begins.

rm -f ~$usr/sm50/latest 2>/dev/null

cd /sapmnt/$SID/profile && \

/usr/sap/$SID/SYS/exe/run/dpmon -i -s l pf=$pro > ~$usr/sm50/latest

if ( ! -z ~$usr/sm50/latest ) then

set newdate=`date "+%Y%m%d-%H%M"`

cd ~$usr/sm50 && mv latest sm50-$SID-$newdate # sm50-SBX-20080312-1739

endif

exit 0

5. During your next system copy, create and use the indexes, then monitor where BDLS spends its time

The index creation SQL script may take a while, so start that as early as you reasonably can, and let it run while you do other work on the system copy. For best results you shouldn't start BDLS before the indexes builds are done.

You can use the script from step 4 to monitor per-table timing for BDLS, or just manually check every so often and note the tables that BDLS takes a long time on. Use SM50 or DB02 to check the SQL statements and explain plans, if you want to be sure the the database is using an index and not an inefficient full table scan.

6. Refine the script by adding indexes on more tables, if more time can be saved in future copies

For continuous improvement, the techniques shown above can measure BDLS performance on future system copies or test runs

that use the new indexes. Consider adding indexes for new tables when they would save time overall, considering the additional time spent building the index.

Conclusion

To sum up, temporary indexes could make your next system copy run much faster without adding a lot of complexity.

I'd like to hear your results. Please send me your comments or questions at jxmonin@gmail.com.

Thank you for taking the time to read this guide.

Copyright (C) 2015 Jeremy D Monin.

Licensed CC BY-SA 4.0.

Stopwatch image is adapted (faded) from

'Stopwatch' by William Warby

(licensed CC BY 2.0)

retrieved 2015-03-04.

This article is about reducing the time it takes to do an SAP system copy,

by focusing on one particular process which often has a very long runtime.

I've used this technique to run system copies substantially faster, saving 16 hours from what was a 30-hour process.

It's simpler than other speed-up methods out there, and from the SAP system's point of view it's no different from the slow usual process.

This article is about reducing the time it takes to do an SAP system copy,

by focusing on one particular process which often has a very long runtime.

I've used this technique to run system copies substantially faster, saving 16 hours from what was a 30-hour process.

It's simpler than other speed-up methods out there, and from the SAP system's point of view it's no different from the slow usual process.